Text by: Tian Xu – Department of Computer Science & Technology, University of Cambridge; Hatice Gunes – Department of Computer Science & Technology, University of Cambridge

A key module of the WAOW Tool is facial affect analysis. Understanding facial affect is important because it may reveal what people feel and what their intentions are. Facial affect analysis can be performed in three complementary ways: by recognising facial expressions of emotion, by predicting the valence and arousal values of the displayed facial expression, and by detecting which facial action units are activated.

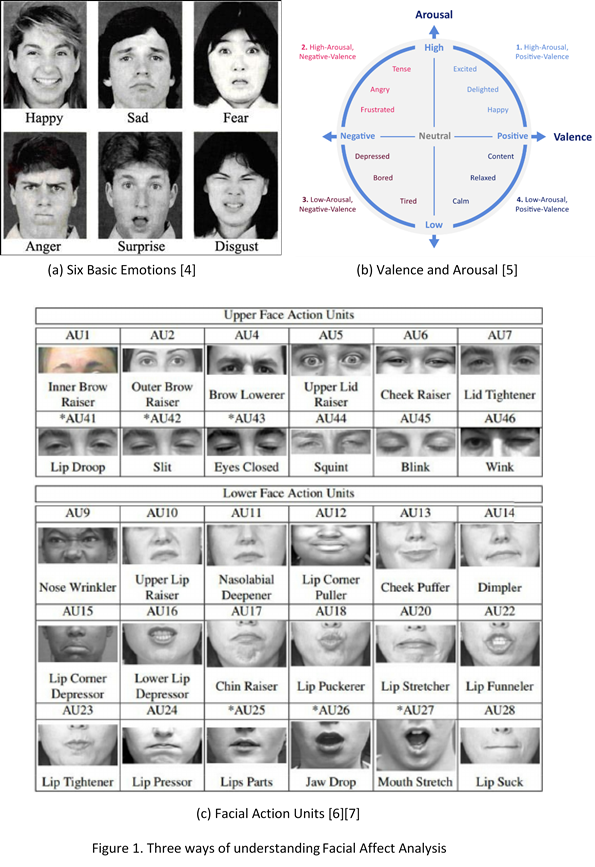

There are different ways to distinguish one facial expression from another. The most common is to describe each expression as belonging to one of a set of discrete categories. Paul Ekman and his colleagues proposed six basic emotion categories of facial expressions (happiness, surprise, fear, disgust, anger and sadness), each with a particular facial configuration that is recognised universally [1] (Figure 1(a)).
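To make the categorical view concrete, here is a minimal Python sketch (not the WAOW implementation) of how a classifier's output might be mapped onto one of the six basic categories. It assumes a hypothetical model that produces one raw score per category; `softmax` and `predict_category` are illustrative names.

```python
import math
from typing import List, Sequence, Tuple

# The six basic emotion categories proposed by Ekman and colleagues [1].
BASIC_EMOTIONS = ("happiness", "surprise", "fear", "disgust", "anger", "sadness")


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw classifier scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_category(scores: Sequence[float]) -> Tuple[str, float]:
    """Return the most probable basic emotion and its probability.

    `scores` is the hypothetical output of some facial expression
    classifier, ordered like BASIC_EMOTIONS.
    """
    if len(scores) != len(BASIC_EMOTIONS):
        raise ValueError("expected one score per basic emotion")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return BASIC_EMOTIONS[best], probs[best]


if __name__ == "__main__":
    label, p = predict_category([2.1, 0.3, -1.0, -0.5, 0.0, -0.8])
    print(label, round(p, 2))
```

With these made-up scores the sketch selects happiness, the category with the highest score. Subtracting the maximum score before exponentiating leaves the probabilities unchanged but avoids overflow for large scores.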

The basic emotion theory has been widely used for the automatic analysis of facial expressions, but it has been criticised because basic emotions cannot explain the full range of facial expressions seen in naturalistic settings. A number of researchers have therefore advocated a dimensional description of human affect, based on the hypothesis that affective states are not separate categories but lie on the same continuum, described by bipolar dimensions. The proposed dimensions are arousal (relaxed vs. aroused) and valence (pleasant vs. unpleasant) [2] (Figure 1(b)).
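Under the dimensional view, an expression is a point in a two-dimensional space rather than a label. The Python sketch below assumes that valence and arousal are each scaled to [-1, 1] (a common convention, but an assumption here) and derives an intensity, an angle and a coarse quadrant from such a point. The `AffectPoint` class, the example words attached to each quadrant and the `neutral_radius` cut-off are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AffectPoint:
    """A point in valence-arousal space; both values are assumed to lie in [-1, 1]."""

    valence: float  # -1 = unpleasant, +1 = pleasant
    arousal: float  # -1 = relaxed, +1 = aroused

    def __post_init__(self) -> None:
        # Reject values outside the assumed [-1, 1] range.
        for name in ("valence", "arousal"):
            value = getattr(self, name)
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [-1, 1], got {value}")

    def intensity(self) -> float:
        """Euclidean distance from the neutral origin (0, 0)."""
        return math.hypot(self.valence, self.arousal)

    def angle_deg(self) -> float:
        """Angle in degrees, counter-clockwise from the positive valence axis, in [0, 360)."""
        return math.degrees(math.atan2(self.arousal, self.valence)) % 360.0

    def quadrant(self, neutral_radius: float = 0.1) -> str:
        """Coarse region of the valence-arousal plane; `neutral_radius` is a hypothetical cut-off."""
        if self.intensity() < neutral_radius:
            return "neutral"
        if self.arousal >= 0:
            if self.valence >= 0:
                return "pleasant-aroused (e.g. excited)"
            return "unpleasant-aroused (e.g. tense)"
        if self.valence >= 0:
            return "pleasant-relaxed (e.g. calm)"
        return "unpleasant-relaxed (e.g. sad)"


if __name__ == "__main__":
    p = AffectPoint(valence=0.6, arousal=0.8)
    print(p.quadrant(), round(p.intensity(), 2), round(p.angle_deg(), 1))
```

Keeping the continuous values and only deriving a quadrant on demand reflects the motivation for the dimensional model: subtle or blended expressions do not have to be forced into a single category.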

Another way to describe and analyse facial affect is the Facial Action Coding System (FACS), a taxonomy of human facial expressions defined in terms of Action Units (AUs) [3] (Figure 1(c)). AUs are the fundamental actions of individual muscles or groups of muscles. Since any facial expression results from the activation of a set of facial muscles, every possible facial expression can be described as a combination of AUs; for example, happiness can be described as pulling the lip corners up (AU 12) combined with raising the cheeks (AU 6).
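Describing expressions as AU combinations lends itself to simple set logic. The Python sketch below assumes a hypothetical AU detector that outputs one probability per AU, binarises those probabilities with an assumed threshold of 0.5, and checks the active AUs against expression prototypes. Only the happiness prototype (AU 6 + AU 12) comes from the text above; the function names are illustrative, and this rule-based matching is a stand-in for explanation, not the deep learning approach the project is developing.

```python
from typing import Dict, FrozenSet, List, Mapping

# FACS names of the two Action Units in the happiness example.
AU_NAMES: Dict[int, str] = {
    6: "cheek raiser",
    12: "lip corner puller",
}

# Expression prototypes as sets of AUs. Only happiness (AU 6 + AU 12) is taken
# from the text; other prototypes would be added here.
PROTOTYPES: Dict[str, FrozenSet[int]] = {
    "happiness": frozenset({6, 12}),
}


def active_aus(au_probs: Mapping[int, float], threshold: float = 0.5) -> FrozenSet[int]:
    """Binarise hypothetical per-AU detector probabilities into a set of active AUs."""
    return frozenset(au for au, p in au_probs.items() if p >= threshold)


def match_expressions(active: FrozenSet[int]) -> List[str]:
    """Return every prototype whose AUs are all active (extra active AUs are allowed)."""
    return [name for name, aus in PROTOTYPES.items() if aus <= active]


def describe(active: FrozenSet[int]) -> str:
    """Human-readable AU combination, with FACS names where known."""
    return " + ".join(f"AU{au} ({AU_NAMES.get(au, 'unnamed')})" for au in sorted(active))


if __name__ == "__main__":
    probs = {6: 0.82, 12: 0.91, 4: 0.10}  # made-up detector output
    active = active_aus(probs)
    print(describe(active), "->", match_expressions(active))
```

Because matching uses a subset test, an expression is still recognised when additional AUs are active, while AU 12 on its own does not satisfy the happiness prototype.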

Within the WorkingAge project, these analyses will be performed in real time, with a focus on designing and evaluating deep learning based frameworks for facial affect analysis. We will describe how our Facial Affect Analysis module works, and how it is linked to the overall WAOW system, in upcoming posts. Watch this space!

References:

[1] Ekman, Paul. “Darwin, deception, and facial expression.” Annals of the New York Academy of Sciences 1000, no. 1 (2003): 205-221.

[2] Posner, Jonathan, James A. Russell, and Bradley S. Peterson. “The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology.” Development and Psychopathology 17, no. 3 (2005): 715-734.

[3] Ekman, Paul, and Wallace V. Friesen. “Facial Action Coding System: A Technique for the Measurement of Facial Movement.” Palo Alto: Consulting Psychologists Press, 1978.

[4] Source: https://www.calmwithyoga.com/the-functions-of-emotions-emotional-well-being/

[5] Source: https://www.powerpost.digital/insights/why-emotions-are-crucial-in-brand-publishing/

[6] Tian, Y-I., Takeo Kanade, and Jeffrey F. Cohn. “Recognizing action units for facial expression analysis.” IEEE Transactions on Pattern Analysis and Machine Intelligence 23, no. 2 (2001): 97-115.

[7] Kanade, Takeo, Jeffrey F. Cohn, and Yingli Tian. “Comprehensive database for facial expression analysis.” In Proceedings Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), pp. 46-53. IEEE, 2000.