Text by: Aris Bonanos – EXUS

EXUS recently completed in-lab testing of its Facial Recognition and Authentication and Gesture-Based Interaction platform, developed for the WorkingAge project. The COVID-19 pandemic complicated matters by limiting how many people could be in our offices at once and by restricting movement within the city. Even so, we “recruited” 10 people to put the system through its paces and find out how easy (or hard!) it was to learn and use.

First, a few words about the system itself. The component allows registered users to interact with the WorkingAge Tool through hand gestures. A user registers simply by uploading a selfie, a step requested when signing up on the mobile app. For our in-lab tests, users provided their profile picture from the company online registry. Any gesture can then be programmed to correspond to a defined interaction with the system. The gestures could be enumeration with fingers, the “thumbs up” or “ok” gestures, or any other combination desired. Such an interaction platform has a wide range of applications: enabling non-verbal communication for law enforcement agents, digitizing and automatically recording the gestures made by referees at sporting events, or letting workers interact with a platform designed to improve their health, as in the WorkingAge project. To achieve this functionality, a suite of Artificial Intelligence and Machine Learning techniques was implemented, relying primarily on deep learning with convolutional neural networks.
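The idea that any gesture can be tied to a defined interaction can be pictured as a small lookup from recognized gesture labels to actions. The sketch below is only an illustration, not the WorkingAge implementation: the `GestureRegistry` class, the gesture labels, and the action names are all hypothetical.

```python
from typing import Callable, Dict

# A handler is any function that performs an interaction and
# returns a short description of what it did.
GestureHandler = Callable[[], str]


class GestureRegistry:
    """Maps recognized gesture labels to interactions (hypothetical sketch)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, GestureHandler] = {}

    def register(self, gesture: str, handler: GestureHandler) -> None:
        """Associate a gesture label with an interaction."""
        self._handlers[gesture] = handler

    def dispatch(self, gesture: str) -> str:
        """Run the interaction for a gesture, or ignore unknown gestures."""
        handler = self._handlers.get(gesture)
        if handler is None:
            return "ignored"
        return handler()


# Illustrative labels and actions only; the real system's names are not published here.
registry = GestureRegistry()
registry.register("thumbs_up", lambda: "confirm")
registry.register("ok", lambda: "dismiss")
registry.register("three_fingers", lambda: "select_option_3")

print(registry.dispatch("thumbs_up"))  # a registered gesture triggers its action
print(registry.dispatch("wave"))       # an unregistered gesture is ignored
```

Keeping the mapping in a registry rather than in the recognizer itself means new gestures can be assigned to interactions without retraining or changing the recognition model.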

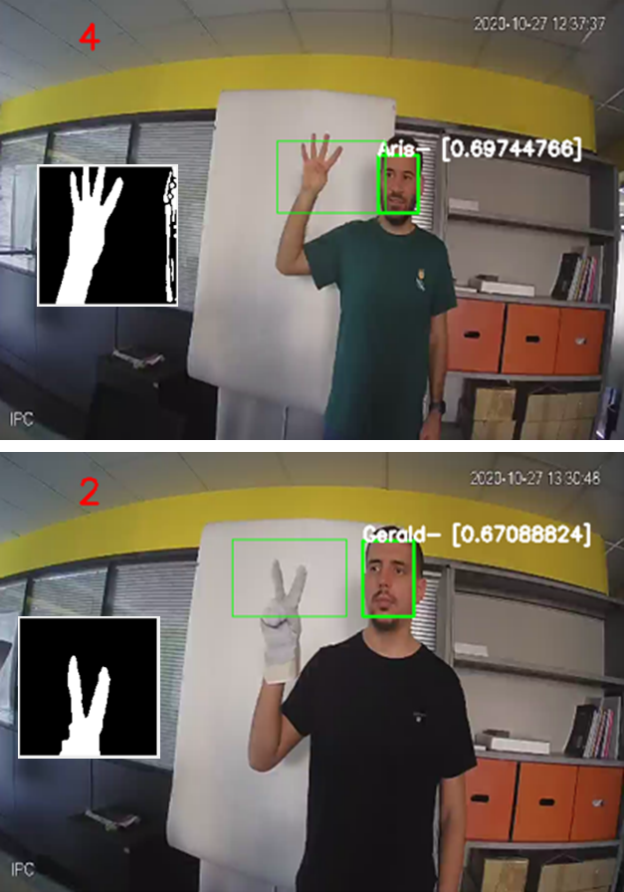

Our in-lab participants found the gesture-based interaction system intuitive and easy to use. Sample system output from two users is shown in the picture. A “similarity score” is returned for each user; here the scores are 67-69%, which is sufficient to correctly identify and authenticate the user and is in line with what we expect at this user-camera distance. Also shown is the enumeration gesture value the system recognized, which was correct in each case. The tests revealed some surprises as well, namely that the system worked better with a solid (white) background, and that it had difficulty correctly identifying gestures 4 and 5. This valuable feedback will be used to improve the system in future iterations.
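For readers curious how a similarity score can drive an authentication decision, here is a minimal sketch. It assumes the score is a cosine similarity between a face embedding from the registered picture and one from the live camera frame, which is a common approach but is not confirmed as the method used in our platform. The function names and the 0.60 threshold are hypothetical.

```python
import math
from typing import Sequence

# Assumed decision threshold, chosen only for illustration.
ASSUMED_THRESHOLD = 0.60


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def is_authenticated(
    enrolled: Sequence[float],
    live: Sequence[float],
    threshold: float = ASSUMED_THRESHOLD,
) -> bool:
    """Accept the user when the similarity reaches the threshold."""
    return cosine_similarity(enrolled, live) >= threshold


# Toy two-dimensional "embeddings"; real face embeddings have many more dimensions.
enrolled_face = [1.0, 0.0]
live_face = [1.0, 1.0]
score = cosine_similarity(enrolled_face, live_face)
print(f"similarity: {score:.0%}, authenticated: {is_authenticated(enrolled_face, live_face)}")
```

In a scheme like this, the threshold trades off false accepts against false rejects, so it would normally be tuned on test data such as the in-lab results rather than fixed in advance.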

We had a very pleasant experience testing our system with real users in our controlled environment. With the in-lab tests of all partners nearing completion, we are looking forward to integrating all components into the complete WorkingAge Tool prototype!