Text by: Vincenzo Scotti – PoliMI

In this blog post, we present the voice analysis tool developed by PoliMI, which enables emotion recognition from voice in the WorkingAge Application. In the actual WAOW tool, our system works together with Audeering's to achieve the best performance.

The human voice conveys much more than words alone. We can extract verbal and non-verbal cues from speech and use them to identify a speaker's emotional state. Verbal cues mostly relate to the actual meaning of the sentence; for example, when someone says “I’m happy”, we can detect happiness from the literal meaning of the words. Non-verbal cues cover all the remaining information carried by speech; for example, a loud voice is usually associated with anger.

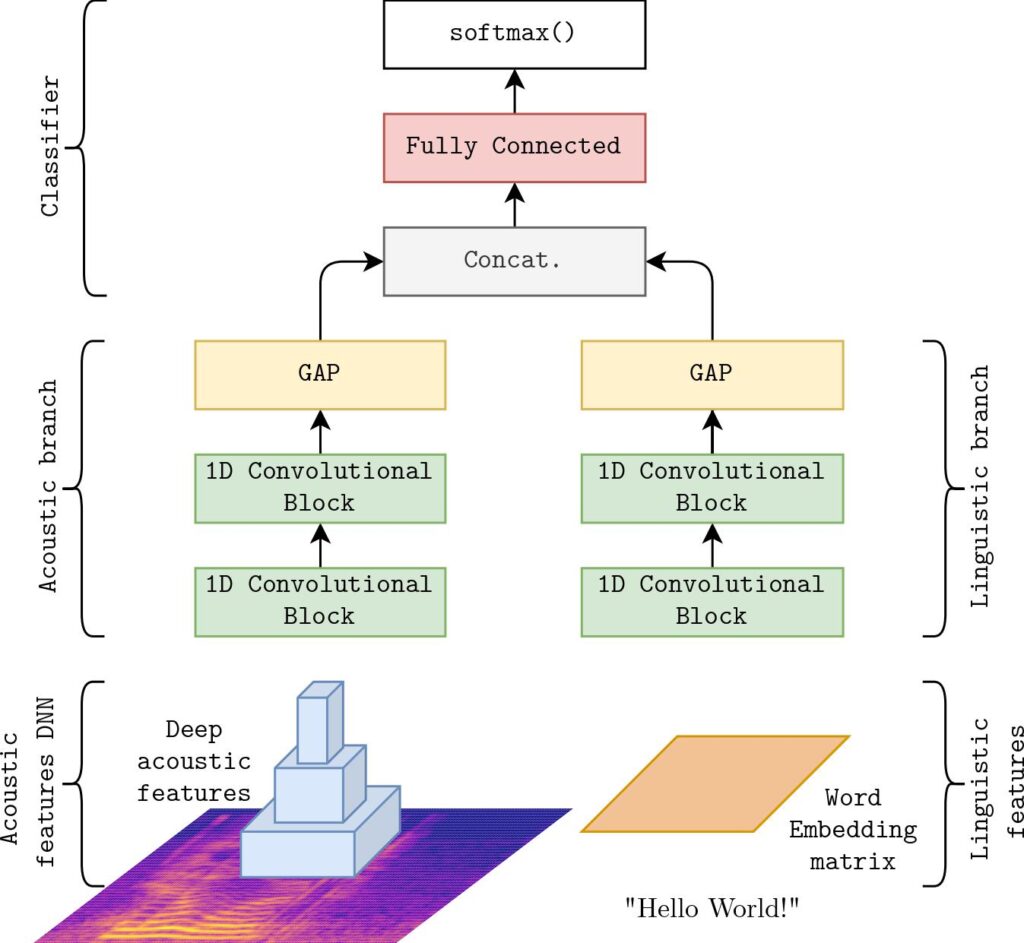

Here at PoliMI, we use speech analysis and artificial neural networks to carry out multimodal emotion recognition. Multimodal emotion recognition means analysing multiple input modalities (such as audio, video, and text) to make recognition more robust. In our case, the input modalities are the audio signal of the speech and the text of its transcription, which we generate automatically using an additional piece of software. Our neural network, called PATHOSnet (Parallel, Audio-Textual, Hybrid Organisation for emotionS network) and depicted in the picture, takes these two inputs and outputs the emotion it perceives with the highest confidence. We tested PATHOSnet on several languages during our experiments (English, Spanish, Greek and Italian), obtaining very promising results in many cases.
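To give a feel for what combining two modalities and picking the most confident emotion can look like, here is a minimal Python sketch. It is not the PATHOSnet implementation: the label set, the `fuse_and_predict` helper, the equal weighting, and the example scores are all assumptions made for illustration, and the sketch uses simple late fusion (a weighted average of per-modality probabilities), which may differ from how the actual network merges its audio and text branches.

```python
import math

# Hypothetical label set, chosen only for illustration.
EMOTIONS = ["angry", "happy", "neutral", "sad"]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_predict(audio_scores, text_scores, audio_weight=0.5):
    """Late fusion (an assumption, not the PATHOSnet method):
    average the two per-modality distributions and return the
    most confident emotion together with its fused probability."""
    if not (len(audio_scores) == len(text_scores) == len(EMOTIONS)):
        raise ValueError("expected one score per emotion for each modality")
    audio_probs = softmax(audio_scores)
    text_probs = softmax(text_scores)
    fused = [
        audio_weight * a + (1.0 - audio_weight) * t
        for a, t in zip(audio_probs, text_probs)
    ]
    best = max(range(len(fused)), key=fused.__getitem__)
    return EMOTIONS[best], fused[best]


# Made-up scores: a loud voice pushes the audio branch towards "angry",
# while the transcription alone reads as fairly neutral.
label, confidence = fuse_and_predict(
    audio_scores=[2.0, 0.1, 0.3, 0.0],
    text_scores=[0.2, 0.1, 1.5, 0.0],
)
print(label, round(confidence, 3))
```

In this toy setup, `audio_weight` controls how much the non-verbal (audio) evidence counts relative to the verbal (text) evidence; a trained model would learn how to combine the two rather than rely on a fixed weight.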

We recently released the code of our model publicly on GitHub; you can find it at the following link: https://github.com/vincenzo-scotti/workingage_voice_service